Implementing Face Recognition in Vue

Ways to Implement Face Recognition in Vue

Using an off-the-shelf face recognition library (such as face-api.js)

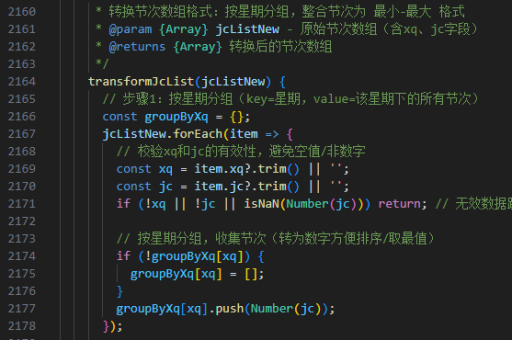

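face-api.js is a JavaScript face recognition library built on TensorFlow.js. It supports face detection, face recognition, and facial landmark detection, and it is easy to integrate into a Vue project.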
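The recognition feature mentioned above comes down to comparing face descriptors: face-api.js's recognition network turns each face into a vector of 128 numbers, and two faces are treated as the same person when their vectors are close enough. The library ships its own matcher for this. The plain-JavaScript sketch below only illustrates the idea; `findBestMatch` and the sample data are hypothetical names, and the 0.6 threshold is an assumption modeled on the commonly used default.

```javascript
// Euclidean distance between two descriptors of equal length.
function euclideanDistance(a, b) {
  if (a.length !== b.length) {
    throw new Error('Descriptors must have the same length');
  }
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const diff = a[i] - b[i];
    sum += diff * diff;
  }
  return Math.sqrt(sum);
}

// Hypothetical helper: returns the closest labeled descriptor,
// or the label 'unknown' when nothing is within the threshold.
function findBestMatch(query, labeledDescriptors, threshold = 0.6) {
  let best = { label: 'unknown', distance: Infinity };
  for (const { label, descriptor } of labeledDescriptors) {
    const distance = euclideanDistance(query, descriptor);
    if (distance < best.distance) {
      best = { label, distance };
    }
  }
  return best.distance <= threshold
    ? best
    : { label: 'unknown', distance: best.distance };
}
```

In a real project the descriptors would come from the library (for example by chaining descriptor computation onto the detection call) rather than being written by hand, and the threshold should be tuned against your own data.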

Install face-api.js:

npm install face-api.js

Use it in a Vue component:

<template>
  <div>
    <video ref="video" width="600" height="450" autoplay muted></video>
    <canvas ref="canvas" width="600" height="450"></canvas>
  </div>
</template>

<script>
import * as faceapi from 'face-api.js';

export default {
  async mounted() {
    // Load the models first; detection fails if it starts before they are ready.
    await this.loadModels();
    await this.startVideo();
  },
  beforeUnmount() {
    // Vue 3 hook (use beforeDestroy in Vue 2): stop the loop and release the camera.
    clearInterval(this.timer);
    if (this.stream) this.stream.getTracks().forEach(track => track.stop());
  },
  methods: {
    async loadModels() {
      // The model files must be served from /models (for example public/models).
      await faceapi.nets.tinyFaceDetector.loadFromUri('/models');
      await faceapi.nets.faceLandmark68Net.loadFromUri('/models');
      // Only needed if you also compute face descriptors for recognition.
      await faceapi.nets.faceRecognitionNet.loadFromUri('/models');
    },
    async startVideo() {
      const video = this.$refs.video;
      if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
        this.stream = await navigator.mediaDevices.getUserMedia({ video: true });
        video.srcObject = this.stream;
        // Start detecting only once frames are actually being rendered.
        video.addEventListener('playing', () => this.detectFaces(), { once: true });
      }
    },
    detectFaces() {
      const video = this.$refs.video;
      const canvas = this.$refs.canvas;
      const displaySize = { width: video.width, height: video.height };
      faceapi.matchDimensions(canvas, displaySize);
      // Run detection roughly every 100 ms and redraw the results.
      this.timer = setInterval(async () => {
        const detections = await faceapi
          .detectAllFaces(video, new faceapi.TinyFaceDetectorOptions())
          .withFaceLandmarks();
        const resizedDetections = faceapi.resizeResults(detections, displaySize);
        canvas.getContext('2d').clearRect(0, 0, canvas.width, canvas.height);
        faceapi.draw.drawDetections(canvas, resizedDetections);
        faceapi.draw.drawFaceLandmarks(canvas, resizedDetections);
      }, 100);
    }
  }
};
</script>

Using native browser APIs (such as WebRTC and the Shape Detection API)

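Modern browsers provide WebRTC for capturing the camera video stream, along with the experimental Shape Detection API for face detection. Because the Shape Detection API is experimental, it is not available in every browser, so check that FaceDetector exists before using it.

Example code: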
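Since support for these APIs varies, it can help to check for them up front and fall back to a library when they are missing. The following is a minimal sketch; `getDetectionSupport` is a hypothetical helper name, and it takes the global object as a parameter so that it can also be exercised outside a browser.

```javascript
// Hypothetical helper: reports which native capabilities are present.
// Pass `window` in a browser; any object with the same shape works in tests.
function getDetectionSupport(env) {
  const mediaDevices = env.navigator && env.navigator.mediaDevices;
  return {
    // Camera capture through getUserMedia.
    camera: Boolean(mediaDevices && typeof mediaDevices.getUserMedia === 'function'),
    // Experimental Shape Detection API face detector.
    faceDetector: typeof env.FaceDetector === 'function'
  };
}
```

In a component you might call `getDetectionSupport(window)` in `mounted()` and only start native detection when both flags are true.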

<template>

<div>

<video ref="video" autoplay playsinline></video>

<canvas ref="canvas"></canvas>

</div>

</template>

<script>

export default {

mounted() {

this.initFaceDetection();

},

methods: {

async initFaceDetection() {

const video = this.$refs.video;

const stream = await navigator.mediaDevices.getUserMedia({ video: true });

video.srcObject = stream;

const faceDetector = new FaceDetector();

const canvas = this.$refs.canvas;

const context = canvas.getContext('2d');

const detect = async () => {

try {

const faces = await faceDetector.detect(video);

context.clearRect(0, 0, canvas.width, canvas.height);

context.drawImage(video, 0, 0, canvas.width, canvas.height);

faces.forEach(face => {

context.strokeStyle = '#FF0000';

context.lineWidth = 2;

context.strokeRect(

face.boundingBox.x,

face.boundingBox.y,

face.boundingBox.width,

face.boundingBox.height

);

});

} catch(e) {

console.error(e);

}

requestAnimationFrame(detect);

};

detect();

}

}

};

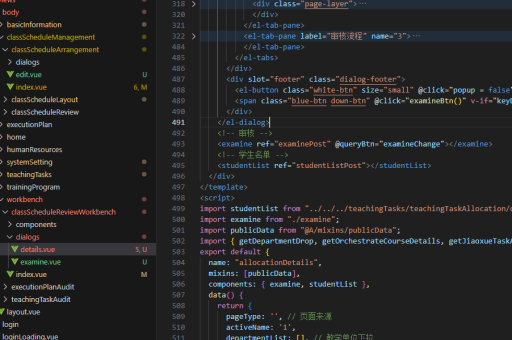

</script>使用第三方服务(如AWS Rekognition或Azure Face API)

For more advanced face recognition needs, you can integrate a cloud provider's face recognition API.

AWS Rekognition example:
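One detail to keep in mind with such services is the coordinate format. Rekognition, for instance, reports each face's BoundingBox as ratios of the image width and height (Left, Top, Width, Height) rather than pixels. Below is a minimal sketch of the conversion; `toPixelBox` is a hypothetical helper, and the clamping is an assumption added for boxes that extend past the image edge.

```javascript
// Hypothetical helper: converts a ratio-based bounding box
// ({ Left, Top, Width, Height }, each relative to the image size)
// into pixel coordinates, clamped to the image area.
function toPixelBox(box, imageWidth, imageHeight) {
  const clamp = value => Math.min(1, Math.max(0, value));
  const left = clamp(box.Left);
  const top = clamp(box.Top);
  const right = clamp(box.Left + box.Width);
  const bottom = clamp(box.Top + box.Height);
  return {
    x: Math.round(left * imageWidth),
    y: Math.round(top * imageHeight),
    width: Math.round((right - left) * imageWidth),
    height: Math.round((bottom - top) * imageHeight)
  };
}
```

The result can be passed straight to a canvas call such as `ctx.strokeRect(b.x, b.y, b.width, b.height)`.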

<template>
  <div>
    <input type="file" @change="detectFaces" accept="image/*">
    <img :src="imageUrl" v-if="imageUrl">
    <canvas ref="canvas" v-if="imageUrl"></canvas>
  </div>
</template>

<script>
import AWS from 'aws-sdk';

export default {
  data() {
    return {
      imageUrl: null
    };
  },
  methods: {
    async detectFaces(event) {
      const file = event.target.files[0];
      if (!file) return; // The user cancelled the file dialog.
      this.imageUrl = URL.createObjectURL(file);
      // Placeholder keys for a demo only. Never ship real credentials in
      // frontend code; use temporary credentials (for example Amazon Cognito)
      // or call Rekognition from your own backend instead.
      const rekognition = new AWS.Rekognition({
        region: 'us-west-2',
        credentials: new AWS.Credentials({
          accessKeyId: 'YOUR_ACCESS_KEY',
          secretAccessKey: 'YOUR_SECRET_KEY'
        })
      });
      const params = {
        // Send the raw image bytes rather than the File object itself.
        Image: { Bytes: new Uint8Array(await file.arrayBuffer()) },
        Attributes: ['ALL']
      };
      rekognition.detectFaces(params, (err, data) => {
        if (err) {
          console.error(err);
          return;
        }
        this.drawFaceBoxes(data.FaceDetails);
      });
    },
    drawFaceBoxes(faceDetails) {
      const canvas = this.$refs.canvas;
      const img = new Image();
      img.onload = () => {
        canvas.width = img.width;
        canvas.height = img.height;
        const ctx = canvas.getContext('2d');
        ctx.drawImage(img, 0, 0);
        faceDetails.forEach(face => {
          // BoundingBox values are ratios of the image size, not pixels.
          const box = face.BoundingBox;
          ctx.strokeStyle = '#FF0000';
          ctx.lineWidth = 2;
          ctx.strokeRect(
            box.Left * canvas.width,
            box.Top * canvas.height,
            box.Width * canvas.width,
            box.Height * canvas.height
          );
        });
      };
      img.src = this.imageUrl;
    }
  }
};
</script>

Notes

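Serve the app over HTTPS, or from localhost during local development, because most browsers only allow camera access in a secure context.

In production, take user privacy into account: clearly tell users what the camera is used for and obtain their consent.

Face recognition model files can be large and take time to load the first time, so consider showing a loading state to improve the user experience.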
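Because users can decline camera access, it is worth handling a failed getUserMedia call explicitly rather than leaving a blank video element. The sketch below maps the standard error names that getUserMedia can reject with to user-facing messages; `describeCameraError` is a hypothetical helper, and the message wording is only illustrative.

```javascript
// Hypothetical helper: turns a getUserMedia rejection into a message
// that can be shown to the user.
function describeCameraError(error) {
  switch (error && error.name) {
    case 'NotAllowedError':
      return 'Camera access was denied. Please allow it to use face detection.';
    case 'NotFoundError':
      return 'No camera was found on this device.';
    case 'NotReadableError':
      return 'The camera is already in use by another application.';
    default:
      return 'The camera could not be started.';
  }
}
```

A component could wrap the `navigator.mediaDevices.getUserMedia({ video: true })` call in `try`/`catch` and store `describeCameraError(err)` in its data for display.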